If you haven’t played with LiveView yet, you should. LiveView is part of Phoenix (Rails-on-Elixir, basically) and helps developers turn simple full stack apps into “rich, real-time user experiences”. It was the first serious HTML-over-websockets implementation and arguably the most important feature in Phoenix.

HTML-over-websockets sounds weird. Why would you want to do that? We already have the JAMstack, and there are hundreds of JavaScript frameworks for building dynamic applications. If you want to build a wicked app, wouldn’t it make sense to start a React app and build a GraphQL API to power it? Or go full-JAMstack and run a bunch of microservices? The answer is often “no – that doesn’t make sense”.

If you’re building a multi-user web application, even a boring CRUD application like most of us do, you’re probably using a framework that’s good at generating HTML. It probably changes data in a database when users do things. And it’s probably good at using that data to generate updated HTML.

LiveView just does that in smaller chunks. When you load a LiveView application in a browser, there’s a bit of JavaScript that establishes a websocket connection to the server, then hangs around waiting for the user or the server to do something interesting. When something changes, the server pushes HTML fragments over the websocket connection, and the client merges them into the DOM.
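To make the "push fragments, merge them in" idea concrete, here is a deliberately tiny model of it. This is a sketch, not LiveView's wire format or client code: the `Page` type and `applyPatch` function are made up for illustration, and the "DOM" is just an object mapping element ids to HTML strings.

```typescript
// Hypothetical model: a page is a set of named regions, each holding HTML.
type Page = Record<string, string>;

// Merge a patch from the server into the current page.
// Only the regions named in the patch change; everything else is kept.
function applyPatch(page: Page, patch: Page): Page {
  return { ...page, ...patch };
}

const initial: Page = {
  title: "<h1>Counter</h1>",
  likes: "<span>500</span>",
};

// Pretend this patch arrived over the websocket after someone clicked "like".
const updated = applyPatch(initial, { likes: "<span>501</span>" });
```

The server only has to send the `likes` region. The title never crosses the wire again, which is the whole trick.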

The effect is slick, and makes it easy to build apps that need to update “likes” in realtime. Or show live metrics for a cluster of servers.

This is all great for server initiated changes. But when users start clicking and tapping and dragging, they start caring about latency. When you show a “like” count with a button next to it, people expect the count to change right when they click the button. A simple LiveView “like” button implementation lags – the user clicks, the browser sends the event to the server, the server does some stuff, then returns the updated count.

If the whole sequence takes under 100ms, it’s probably fine for a like button. Other types of interactions need much quicker responses. If you can keep things under 40ms for most users, you’re doing good.

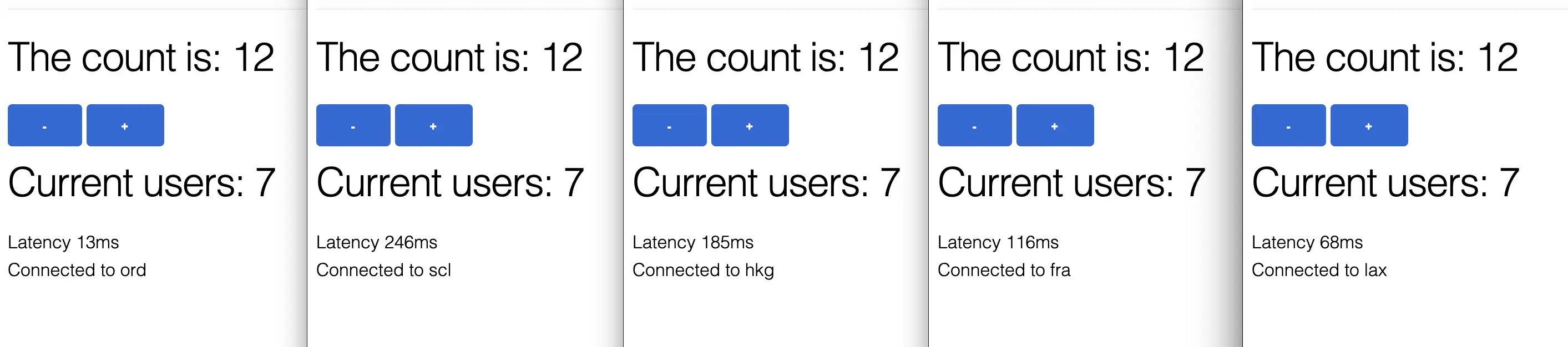

This is what a LiveView app running in Chicago looks like to people living in other places. Chicago is one of the most well-connected cities in the world, so this is close to best case for a LiveView app.

There are 2.5 basic strategies for improving interaction response times in LiveView. You can (1) predict new state client side, (2) decrease round trip time, and (2.5) show a loading indicator. Loading indicators don’t make interactions faster, but they can work as a placebo in a pinch.

Client side state prediction really just means “we know the previous like count was 500, when a user clicks we can increment that locally, and then display 501”. The prediction might be wrong, but that’s ok because the server will tell LiveView to fix it later. This is how most multiplayer games work. When you tell your little character to move, it moves immediately on your screen, and tells the server what it’s doing. When your internet goes bad, you’ll often see your character move erratically while the local client tries to sync up with server messages. That’s just the universe collapsing timelines into reality.
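Here is roughly what that prediction looks like for the like counter. It's a minimal sketch under a few assumptions: `PredictedCounter` and its methods are hypothetical names (not LiveView APIs), and the server is assumed to tell the client how many of its clicks the pushed count already includes.

```typescript
// Hypothetical optimistic counter; names are illustrative, not LiveView APIs.
class PredictedCounter {
  private confirmed: number; // last count the server told us about
  private pending = 0;       // local clicks the server has not acknowledged yet

  constructor(initial: number) {
    this.confirmed = initial;
  }

  // Called on click: bump the displayed value now, before the server responds.
  click(): number {
    this.pending += 1;
    return this.displayed();
  }

  // Called when the server pushes its count. `acked` is how many of our
  // own clicks that count already includes (an assumption of this sketch).
  serverUpdate(count: number, acked: number): number {
    this.confirmed = count;
    this.pending = Math.max(0, this.pending - acked);
    return this.displayed();
  }

  // What the user sees: the server's truth plus our unconfirmed clicks.
  displayed(): number {
    return this.confirmed + this.pending;
  }
}

const likes = new PredictedCounter(500);
likes.click();              // display jumps to 501 right away
likes.serverUpdate(503, 1); // server saw our click plus two from other people
```

The server always wins. The local guess only covers the gap between the click and the server's reply.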

This is a reasonable way to solve user input latency. It’s more complicated than just letting the server processes do all the work, but it’s absolutely correct when you’re building a first person shooter. When you’re making a CRUD app live, or building slightly less intense collaborative apps, this might be more complication than necessary. Finding a boring option could save you some time.

The boring option when latency is a problem is to make the latency go away. You can accomplish this by either increasing the speed of light or moving your application closer to your users. Faster-than-light networking is not readily available from cloud providers, so it’s best not to count on that.
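The speed-of-light problem is easy to put numbers on. The sketch below estimates a floor for round trip time between two points, assuming light travels through fiber at roughly 200,000 km/s (about two thirds of its speed in a vacuum), that the cable follows a perfect great-circle path, and that the city coordinates are approximate. Real networks are slower than this on every count.

```typescript
const FIBER_KM_PER_MS = 200;  // assumption: ~200,000 km/s in fiber
const EARTH_RADIUS_KM = 6371; // mean Earth radius

interface Point {
  lat: number; // degrees
  lon: number; // degrees
}

// Great-circle distance between two points using the haversine formula.
function greatCircleKm(a: Point, b: Point): number {
  const rad = (deg: number) => (deg * Math.PI) / 180;
  const dLat = rad(b.lat - a.lat);
  const dLon = rad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(rad(a.lat)) * Math.cos(rad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Best-case round trip: there and back, at fiber speed, with no routing detours.
function minRoundTripMs(a: Point, b: Point): number {
  return (2 * greatCircleKm(a, b)) / FIBER_KM_PER_MS;
}

// Approximate coordinates, for illustration only.
const chicago: Point = { lat: 41.88, lon: -87.63 };
const sydney: Point = { lat: -33.87, lon: 151.21 };
const floorMs = minRoundTripMs(chicago, sydney);
```

Under these assumptions the Chicago to Sydney floor lands somewhere around 150ms, which is already over the 100ms budget for a like button before the server does any work at all.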

Faster than light networking

Quantum entangled network interfaces are coming soon (in relativistic terms). Sign up for Fly and deploy apps close to users until it’s ready.


Moving app processes close to users is totally workable, though. It’s very easy if all your users are in one city! You just run your app there. If your users are spread out, it takes a little elbow grease. You just put your app in multiple cities, hook ‘em up over a private network, switch on clustering, and figure out how to route users to the nearest instance.
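The "route users to the nearest instance" part boils down to picking the region with the lowest round trip time for each visitor. A minimal sketch, assuming you somehow have per-region latency measurements: `nearestRegion` is a hypothetical helper, and the numbers are made up for a visitor somewhere in Europe. In practice this decision usually happens in the network or load balancer rather than in application code.

```typescript
// Hypothetical helper: pick the region with the lowest measured round trip time.
function nearestRegion(rttMs: Record<string, number>): string | undefined {
  let best: string | undefined;
  for (const region of Object.keys(rttMs)) {
    if (best === undefined || rttMs[region] < rttMs[best]) {
      best = region;
    }
  }
  return best; // undefined when there are no measurements
}

// Made-up measurements, in milliseconds, keyed by region code.
const sample: Record<string, number> = {
  iad: 95,
  lax: 150,
  ams: 12,
  nrt: 230,
  syd: 280,
  sin: 170,
  scl: 210,
};

const chosen = nearestRegion(sample); // "ams" for these sample numbers
```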

Clustering is supposed to be harder than flipping a switch. But we’re using LiveView! It’s literally a configuration option. It takes eight lines of config to teach your Phoenix instances to find and talk to each other across a network. The underlying primitives, like Phoenix.PubSub and Phoenix.Presence, handle clustering transparently.

What’s left? Getting your app in multiple cities, networking across regions, and intelligently routing visitors. We do these things for you, so you can understand why this article exists. Here’s how we deployed our example LiveView Counter app all over the world:

```
fly launch
fly regions set iad lax ams nrt syd sin scl
fly scale count 7
```

Just deploy to Fly.io and it’s done. Or don’t! You can replicate this setup on any cloud run by a bookstore or search engine. The point is, we make it easy and you shouldn’t bother. But we also write about how we do it if you feel like trying it out.